Welcome to the introductory practical module of our interactive Computational Fluid Dynamics (CFD) course. This course, requiring only basic programming skills and foundational knowledge in fluid mechanics and differential equations, is conducted entirely in Python. Beginners in Python will learn as we progress through the course.

In this Jupyter notebook, we’ll start developing a Navier-Stokes solver in Python. Don’t worry if some concepts aren’t clear initially; we’ll explore them thoroughly as we go along. After completing this notebook, try writing your own code in Python or in a fresh Jupyter notebook.

Note: It’s assumed that you have initiated the Jupyter notebook server with the command: jupyter notebook.

Step 1: 1-D Linear Convection#

The 1-D Linear Convection equation is the simplest, most basic model that can be used to learn something about CFD. It is surprising that this little equation can teach us so much! Here it is:

With given initial conditions (understood as a wave), the equation represents the propagation of that initial wave with speed \(c\), without change of shape. Let the initial condition be \(u(x,0)=u_0(x)\). Then the exact solution of the equation is \(u(x,t)=u_0(x-ct)\).

We discretize this equation in both space and time, using the Forward Difference scheme for the time derivative and the Backward Difference scheme for the space derivative. Consider discretizing the spatial coordinate \(x\) into points that we index from \(i=0\) to \(N\), and stepping in discrete time intervals of size \(\Delta t\).

From the definition of a derivative (and simply removing the limit), we know that:

Our discrete equation, then, is:

Where \(n\) and \(n+1\) are two consecutive steps in time, while \(i-1\) and \(i\) are two neighboring points of the discretized \(x\) coordinate. If there are given initial conditions, then the only unknown in this discretization is \(u_i^{n+1}\). We can solve for our unknown to get an equation that allows us to advance in time, as follows:

Now let’s try implementing this in Python.

We’ll start by importing a few libraries to help us out.

numpyis a library that provides a bunch of useful matrix operations akin to MATLABmatplotlibis a 2D plotting library that we will use to plot our resultstimeandsysprovide basic timing functions that we’ll use to slow down animations for viewing

It is a conservative discretization scheme, discreitized from the conservative form of the equation.

# Remember: comments in python are denoted by the pound sign

import numpy as np #here we load numpy and create a shortcut np

from matplotlib import pyplot #here we load matplotlib

import time, sys #and load some utilities

#this makes matplotlib plots appear in the notebook (instead of a separate window)

%matplotlib inline

Now let’s define a few variables; we want to define an evenly spaced grid of points within a spatial domain that is 2 units of length wide, i.e., \(x_i\in(0,2)\). We’ll define a variable nx, which will be the number of grid points we want and dx will be the distance between any pair of adjacent grid points.

nx = 61 # try changing this number from 41 to 81 and Run All... What happens?

dx = 2 / (nx-1) # delta x

dx

0.03333333333333333

nt = 20 #nt is the number of timesteps we want to calculate

dt = 0.025 #dt is the number of time that each timestep covers (delta t)

c = 1 #assume wavespeed of c = 1

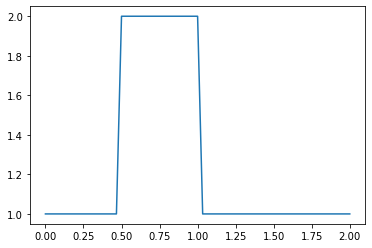

We also need to set up our initial conditions. The initial velocity \(u_0\) is given as \(u = 2\) in the interval \(0.5 \leq x \leq 1\) and \(u = 1\) everywhere else in \((0,2)\) (i.e., a hat function).

Here, we use the function ones() defining a numpy array which is nx elements long with every value equal to 1.

u = np.ones(nx) #numpy function ones()

u[int(.5 / dx):int(1 / dx + 1)] = 2 #setting u = 2 between 0.5 and 1 as per our I.C.s

print(u)

#print(np.linspace(0, 2, nx))

[1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 2. 2. 2. 2. 2. 2. 2. 2. 2.

2. 2. 2. 2. 2. 2. 2. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1.

1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1.]

int(3.9 / 2) # int() truncates the decimal part

1

Now let’s take a look at those initial conditions using a Matplotlib plot. We’ve imported the matplotlib plotting library pyplot and the plotting function is called plot, so we’ll call pyplot.plot. To learn about the myriad possibilities of Matplotlib, explore the Gallery of example plots.

Here, we use the syntax for a simple 2D plot: plot(x,y), where the x values are evenly distributed grid points:

pyplot.plot(np.linspace(0, 2, nx), u);

Why doesn’t the hat function have perfectly straight sides? Think for a bit.

Now it’s time to implement the discretization of the convection equation using a finite-difference scheme.

For every element of our array u, we need to perform the operation \(u_i^{n+1} = u_i^n - c \frac{\Delta t}{\Delta x}(u_i^n-u_{i-1}^n)\)

We’ll store the result in a new (temporary) array un, which will be the solution \(u\) for the next time-step. We will repeat this operation for as many time-steps as we specify and then we can see how far the wave has convected.

We first initialize our placeholder array un to hold the values we calculate for the \(n+1\) timestep, using once again the NumPy function ones().

Then, we may think we have two iterative operations: one in space and one in time (we’ll learn differently later), so we’ll start by nesting one loop inside the other. Note the use of the nifty range() function.

un = np.ones(nx) # initialize a temporary array

for n in range(nt): # loop n from 0 to nt-1, so it will run nt times

un = u.copy() # copy the existing values of u into un

for i in range(1, nx): # change the range to 0 to nx, and see what happens

u[i] = un[i] - c * dt / dx * (un[i]-un[i-1])

When we write: for i in range(1,nx) we will iterate through the u array, but we’ll be skipping the first element (the zero-th element). Why?

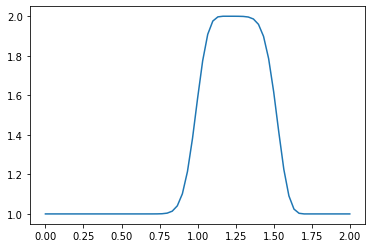

pyplot.plot(np.linspace(0, 2, nx), u);

OK! So our hat function has definitely moved to the right (its center from 0.75 to 1.25), but it’s no longer a hat. What’s going on?

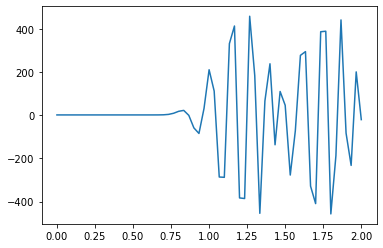

Another, more severe issue arises when changing the value of ‘c’ from 1 to -1, resulting in the solver experiencing instability. What’s going on?

c = -1

un = np.ones(nx) # initialize a temporary array

for n in range(nt): # loop n from 0 to nt-1, so it will run nt times

un = u.copy() # copy the existing values of u into un

for i in range(1, nx): # change the range to 0 to nx, and see what happens

u[i] = un[i] - c * dt / dx * (un[i]-un[i-1])

pyplot.plot(np.linspace(0, 2, nx), u);

Accuray of finite difference schemes (optional)#

Taylor expansions are instrumental in assessing the accuracy of finite difference schemes, specifically how the rate of error reduction corresponds to a decrease in cell size:

\(u(x+\Delta x)=u(x)+\Delta x \frac{\partial u}{\partial x}\big|_x+ \frac{\Delta x^2}{2!}\frac{\partial^2 u}{\partial x^2}\big|_x+\frac{\Delta x^3}{3!}\frac{\partial^3 u}{\partial x^3}\big|_x+...+\frac{\Delta x^n}{n!}\frac{\partial^n u}{\partial x^n}\big|_x+...\)

\(u(x-\Delta x)=u(x)-\Delta x \frac{\partial u}{\partial x}\big|_x+ \frac{\Delta x^2}{2!}\frac{\partial^2 u}{\partial x^2}\big|_x-\frac{\Delta x^3}{3!}\frac{\partial^3 u}{\partial x^3}\big|_x+...+\frac{\Delta x^n}{n!}\frac{\partial^n u}{\partial x^n}\big|_x-...\)

Reorganize the first Taylor expansion, we get the Forward Differencing scheme (1st order accuracy): \(\frac{\partial u}{\partial x}\big|_x=\frac{u(x+\Delta x)-u(x)}{\Delta x}+\frac{\Delta x}{2!}\frac{\partial^2 u}{\partial x^2}\big|_x+...=\frac{u(x+\Delta x)-u(x)}{\Delta x}+O(\Delta x)\)

Reorganize the second Taylor expansion, we get the Backward Differencing scheme (1st order accuracy): \(\frac{\partial u}{\partial x}\big|_x=\frac{u(x)-u(x-\Delta x)}{\Delta x}+\frac{\Delta x}{2!}\frac{\partial^2 u}{\partial x^2}\big|_x-...=\frac{u(x)-u(x-\Delta x)}{\Delta x}+O(\Delta x)\)

Substract the second one from the first one, we get the Central Differencing scheme (2nd order accuracy): \(u(x+\Delta x)-u(x-\Delta x)=2\frac{\partial u}{\partial x}\big|_x+2\frac{\Delta x^3}{3!}\frac{\partial^3 u}{\partial x^3}\big|_x+...\)

\(\frac{\partial u}{\partial x}\big|_x=\frac{u(x+\Delta x)-u(x-\Delta x)}{2\Delta x}+2\frac{\Delta x^2}{3!}\frac{\partial^3 u}{\partial x^3}\big|_x+...=\frac{u(x+\Delta x)-u(x-\Delta x)}{2\Delta x}+O(\Delta x^2)\)